Dyslexia is a neurodevelopmental condition estimated to affect 5–10% of people in most countries, irrespective of their educational and cultural background. Dyslexic individuals experience persistent difficulties with reading and writing, often struggling to identify words and spell them correctly.

Past twin studies suggest that dyslexia is to a large extent heritable, meaning that its emergence is substantially influenced by genetic factors passed down from parents and grandparents. However, the exact genetic variants (i.e., small differences in DNA sequences) linked to dyslexia have not yet been clearly delineated.

Researchers at the University of Edinburgh, the Max Planck Institute for Psycholinguistics and various other institutes recently carried out the largest genome-wide association study to date exploring the genetic underpinnings of dyslexia. Their paper, published in Translational Psychiatry, identifies several previously unknown genetic loci linked to an increased likelihood of experiencing dyslexia.

“This research was motivated by the longstanding challenge of identifying the genetic basis of dyslexia, a common and often inherited learning difference, characterized by difficulties with reading, spelling or writing,” Hayley Mountford, Research Fellow at the University of Edinburgh’s School of Psychology, told Medical Xpress.

“Although prior studies had revealed some genetic associations, research into dyslexia is still far behind that of autism or attention deficit hyperactivity disorder (ADHD), and the biological mechanisms remained unclear. The recent availability of summary statistics from two large genome-wide association studies (GWAS) allowed us to combine them in a more powerful meta-analysis.”

The primary goals of the study by Mountford and her colleagues were to uncover new genes linked to dyslexia and to gain new insight into the biological basis of differences in reading ability. In addition, the researchers wished to explore whether a person’s genetic score (also known as a polygenic index) could predict reading difficulties.

As part of their study, they also tried to determine whether dyslexia-associated genetic variants show signs of recent evolutionary selection. Finally, the team hoped to reduce the stigma associated with dyslexia by shedding new light on its underlying biological processes.

“We started by bringing together two large genetic datasets from previous studies: one from the GenLang Consortium, which includes detailed reading ability test data, and another from 23andMe, which included more than 50,000 people reporting a dyslexia diagnosis. In total, we analyzed genetic data from more than 1.2 million people,” explained Mountford.

“We used a method called MTAG (Multi-Trait Analysis of GWAS), which allows you to jointly analyze related traits, such as reading ability and dyslexia diagnosis, to detect more genetic associations than could be found by analyzing them separately.”
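To give a rough sense of why pooling summary statistics adds statistical power, here is a minimal Python sketch of a plain inverse-variance weighted meta-analysis for a single variant. This is a simpler, standard technique and not MTAG itself, which additionally models the genetic correlation between traits and sample overlap. The function name and the effect sizes and standard errors below are hypothetical, chosen only for illustration.

```python
import math


def inverse_variance_meta(betas, ses):
    """Combine per-study effect estimates for one variant.

    betas: effect size estimates from each study
    ses:   the matching standard errors
    Returns the pooled effect, its standard error and the z-score.
    """
    weights = [1.0 / se ** 2 for se in ses]  # more precise studies count more
    total = sum(weights)
    beta = sum(w * b for w, b in zip(weights, betas)) / total
    se = math.sqrt(1.0 / total)
    return beta, se, beta / se


# Hypothetical numbers for one variant measured in two studies.
beta, se, z = inverse_variance_meta(betas=[0.020, 0.030], ses=[0.010, 0.010])
print(f"pooled beta={beta:.3f}, se={se:.4f}, z={z:.2f}")
```

In this toy case the two studies have z-scores of 2 and 3 on their own, while the pooled z-score is about 3.5, which is the sense in which a combined analysis can detect associations that separate analyses would miss.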

After they identified the associated genetic variants, the researchers used bioinformatic tools to better understand how these variants contribute to the biology of dyslexia. In addition, they created a polygenic index, a single score that summarizes an individual’s genetic predisposition to a given trait or condition across many variants.

Mountford and her colleagues subsequently tried to determine how well this index predicted reading performance in a group of children. Finally, they examined ancient DNA spanning the past 15,000 years to explore how these genes have evolved over time.
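In its most common form, a polygenic index is a weighted sum: for each variant, the number of copies of the effect allele a person carries (0, 1 or 2) is multiplied by that variant's estimated effect size, and the products are added up. The Python sketch below illustrates this general idea only; the function name, weights and genotypes are hypothetical and are not taken from the study.

```python
def polygenic_index(dosages, weights):
    """Weighted sum of effect-allele counts (0, 1 or 2) across variants."""
    if len(dosages) != len(weights):
        raise ValueError("need exactly one weight per variant")
    return sum(d * w for d, w in zip(dosages, weights))


# Hypothetical per-variant effect sizes (in a real analysis, from GWAS results).
weights = [0.5, -0.25, 0.125]

# Hypothetical genotypes: copies of the effect allele at each variant.
person_a = [2, 0, 1]
person_b = [0, 2, 0]

score_a = polygenic_index(person_a, weights)  # 2*0.5 + 0*(-0.25) + 1*0.125
score_b = polygenic_index(person_b, weights)  # 0*0.5 + 2*(-0.25) + 0*0.125
print(score_a, score_b)
```

Real indices sum over a very large number of variants, and the raw scores are usually standardized within a sample before being compared.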

“Our study represents the largest and most powerful genetic analysis of dyslexia to date,” said Mountford. “We identified 80 regions associated with dyslexia, including 36 regions which were not previously reported as significant. Of these 36 regions, 13 were entirely novel with no prior suggestive association with dyslexia. This significantly expands our understanding of the genetic architecture of reading-related traits.”

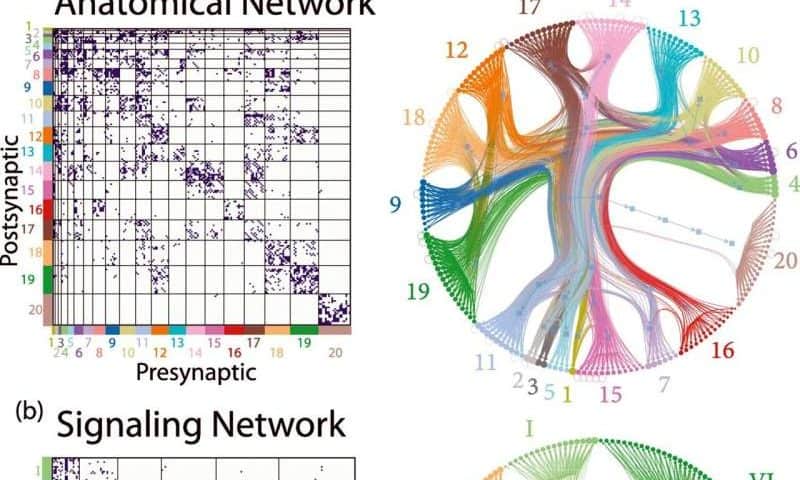

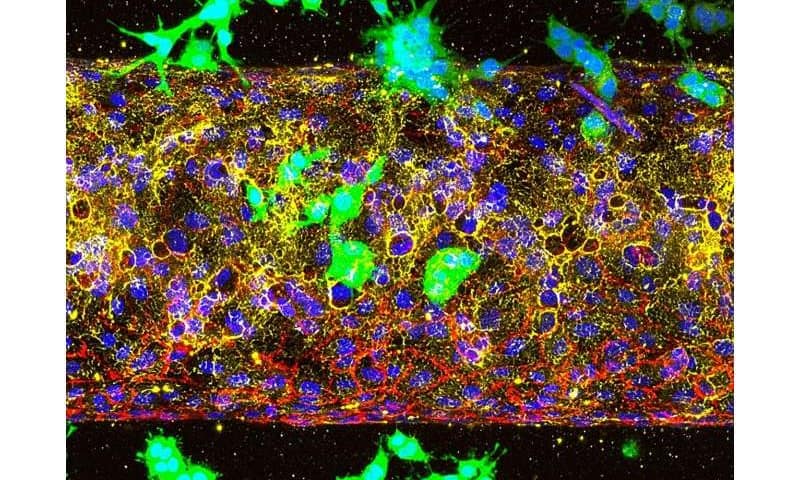

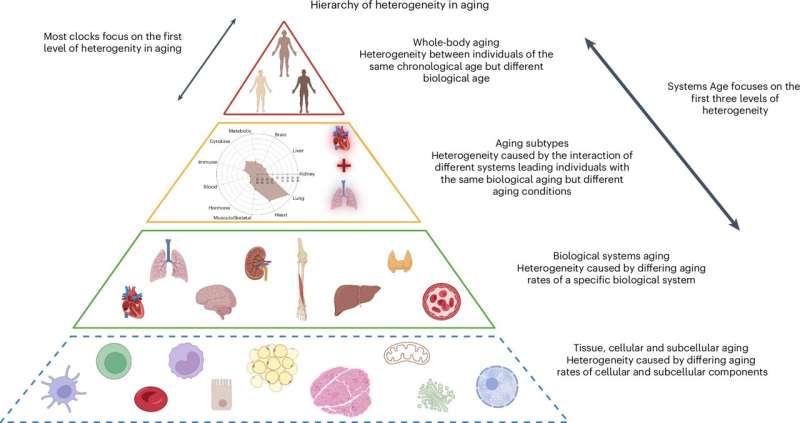

Interestingly, the researchers found that many of the genes they uncovered are active in brain regions that are known to still be developing in the early stages of life. In addition, these genes appeared to support signaling between neurons and the establishment of the communication points (i.e., synapses) that connect them.

“Our polygenic index was able to explain up to 4.7% of the variance in reading ability in an independent sample, and while modest, this is a meaningful step toward potential early identification of reading difficulties,” said Mountford. “We found no evidence of recent evolutionary selection for or against dyslexia associated genes, suggesting it has not been affected by any major social or societal changes that have taken place in the past 15,000 years in northern Europe.”
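The "variance explained" figure is commonly summarized as R², which for a single predictor equals the squared correlation between the index and the measured trait. The Python sketch below shows that calculation on a tiny made-up dataset; the numbers are hypothetical, and the study's own estimate may have been computed differently (for example, after adjusting for covariates).

```python
from statistics import mean


def variance_explained(index, outcome):
    """Squared Pearson correlation (R^2) between a predictor and an outcome."""
    mx, my = mean(index), mean(outcome)
    sxy = sum((x - mx) * (y - my) for x, y in zip(index, outcome))
    sxx = sum((x - mx) ** 2 for x in index)
    syy = sum((y - my) ** 2 for y in outcome)
    return sxy ** 2 / (sxx * syy)


# Hypothetical polygenic index values and reading scores for four children.
index_values = [1, 2, 3, 4]
reading_scores = [2, 1, 4, 3]

r2 = variance_explained(index_values, reading_scores)
print(f"R^2 = {r2:.2f}")
```

On the same scale, the 4.7% reported in the study corresponds to an R² of 0.047, meaning that most of the variation in reading ability is left unexplained by the index.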

The work by Mountford and her colleagues advances the understanding of dyslexia and reading abilities in general, shedding new light on their biological underpinnings. The researchers were able to identify 13 entirely novel genetic loci linked to dyslexia and found that many of the associated genes are implicated in early brain development.

As part of their next studies, they plan to conduct cross-trait genetic analyses. These analyses would, for instance, allow them to learn whether some dyslexia-related genes overlap with genes associated with ADHD, language impairments and other neurodevelopmental conditions, while also identifying condition-specific genes.

“We also plan to explore how genetic risk for dyslexia influences outcomes across the lifespan, including education, career, and mental health,” added Mountford.

“Concurrently, we will try to enhance polygenic scores by incorporating more diverse samples and integrating environmental factors like early education and home literacy environments. Finally, we plan to conduct a follow-up study investigating how the newly identified genes influence brain development, using cellular models and imaging genetics.”