A digital pathology collaboration between Microsoft, the University of Washington and the Providence health network aims to overcome several obstacles to fully implementing artificial intelligence in cancer diagnostics, in some cases through sheer scale.

The team of researchers put forward a machine learning model that, according to Providence, is built upon one of the largest AI training efforts to date in real-world, whole-slide tissue analysis.

That includes 1.3 billion pathology images derived from more than 171,000 scanned slides provided by the health system—which pegs the dataset’s size as five to 10 times larger than other curated collections, such as The Cancer Genome Atlas.

The slides were taken from more than 30,000 patients and span 31 major tissue types, while the project as a whole also includes radiology scans, genomics results and patient health records.

“This transformative work is the result of focused efforts to overcome three major challenges that have stymied previous computational pathology models from widely being applied in the clinical setting: shortage of real-world data, inability to incorporate whole-slide modeling and lack of accessibility,” Ari Robicsek, Providence’s chief analytics and research officer, said in the health system’s blog post.

Previous computer vision programs have also struggled to take in the immense amount of information in a standard slide, where a single high-resolution file can span multiple gigabytes. Instead, they break the image up into thousands of separate tiles for analysis.
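To make the tiling step concrete, here is a minimal Python sketch of how a slide's pixel grid might be cut into fixed-size tiles. The slide dimensions, the 256-pixel tile size and the helper names `tile_grid` and `tile_origins` are illustrative assumptions, not details taken from the researchers' pipeline. Real pipelines also tend to discard empty background tiles, which this sketch does not attempt.

```python
def tile_grid(width_px, height_px, tile_px=256):
    """Return (columns, rows, total) for square tiles covering an image.

    Partial tiles along the right and bottom edges are counted as tiles.
    The 256-pixel default is an assumption made for illustration.
    """
    if width_px <= 0 or height_px <= 0 or tile_px <= 0:
        raise ValueError("dimensions and tile size must be positive")
    cols = (width_px + tile_px - 1) // tile_px   # integer ceiling division
    rows = (height_px + tile_px - 1) // tile_px
    return cols, rows, cols * rows


def tile_origins(width_px, height_px, tile_px=256):
    """Yield the (x, y) top-left corner of each tile in row-major order."""
    for y in range(0, height_px, tile_px):
        for x in range(0, width_px, tile_px):
            yield x, y


# Hypothetical slide of 40,000 x 30,000 pixels (assumed, not from the article).
cols, rows, total = tile_grid(40_000, 30_000)
print(f"{cols} x {rows} grid = {total:,} tiles")

# Rough average implied by the article's own figures:
# about 1.3 billion images from about 171,000 slides.
avg_tiles_per_slide = 1_300_000_000 / 171_000
print(f"about {round(avg_tiles_per_slide):,} images per slide on average")
```

Dividing the article's two headline numbers gives an average in the thousands of images per slide, which is consistent with the "thousands of separate tiles" described above.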

To digest it all, researchers adapted Microsoft’s LongNet program, which operates similarly to large language models, but with the ability to tackle much longer sequences of data. For example, a written prompt to an AI chatbot may be read by the computer as a sequence made of dozens of interconnected tokens—while LongNet is built to handle as many as 1 billion tokens at once.
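The article does not spell out why very long sequences are hard for conventional models. One widely cited reason is that standard Transformer self-attention compares every token with every other token, so the work grows with the square of the sequence length. The Python sketch below only illustrates that growth. The token counts for the chat prompt and the tiled slide are assumed for illustration (treating each tile as one token is itself an assumption), and the code does not describe how LongNet works internally.

```python
def pairwise_comparisons(num_tokens):
    """Token-to-token comparisons made by full (non-sparse) self-attention."""
    return num_tokens * num_tokens


examples = [
    ("chat prompt (assumed 50 tokens)", 50),
    ("tiled slide (assumed 10,000 tokens)", 10_000),
    ("LongNet's stated limit", 1_000_000_000),
]

for label, n in examples:
    print(f"{label}: {n:,} tokens -> {pairwise_comparisons(n):,} comparisons")
```

Going from dozens of tokens to thousands multiplies the comparison count by a factor in the tens of thousands, which is why whole-slide modeling calls for an approach built for long sequences.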

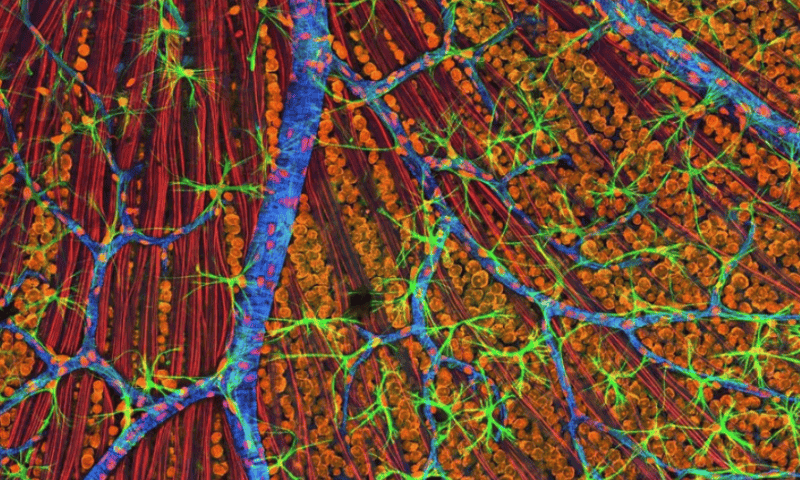

The result is Prov-GigaPath, an AI pathology model designed to read patterns across the entire slide, with the goal of improving predictions about a patient’s particular cancer mutations and their subtypes as well as the effects the tumor microenvironment may have on different therapies.

The researchers’ work was published in the journal Nature. It showed that Prov-GigaPath could accurately complete 17 typical pathology tasks and nine subtyping tasks, including predicting pan-cancer gene mutations, and that it outperformed other digital pathology methods.

“The rich data in pathology slides can, through AI tools like Prov-GigaPath, uncover novel relationships and insights that go beyond what the human eye can discern,” said Carlo Bifulco, chief medical officer of Providence Genomics.

The researchers said the next steps would be to leverage the AI model to develop new diagnostic applications, including in studies of the tumor microenvironment and aiding treatment selection.